Databricks Foreign Catalogs

A deep dive into Databricks Foreign Catalogs for Glue Iceberg table access

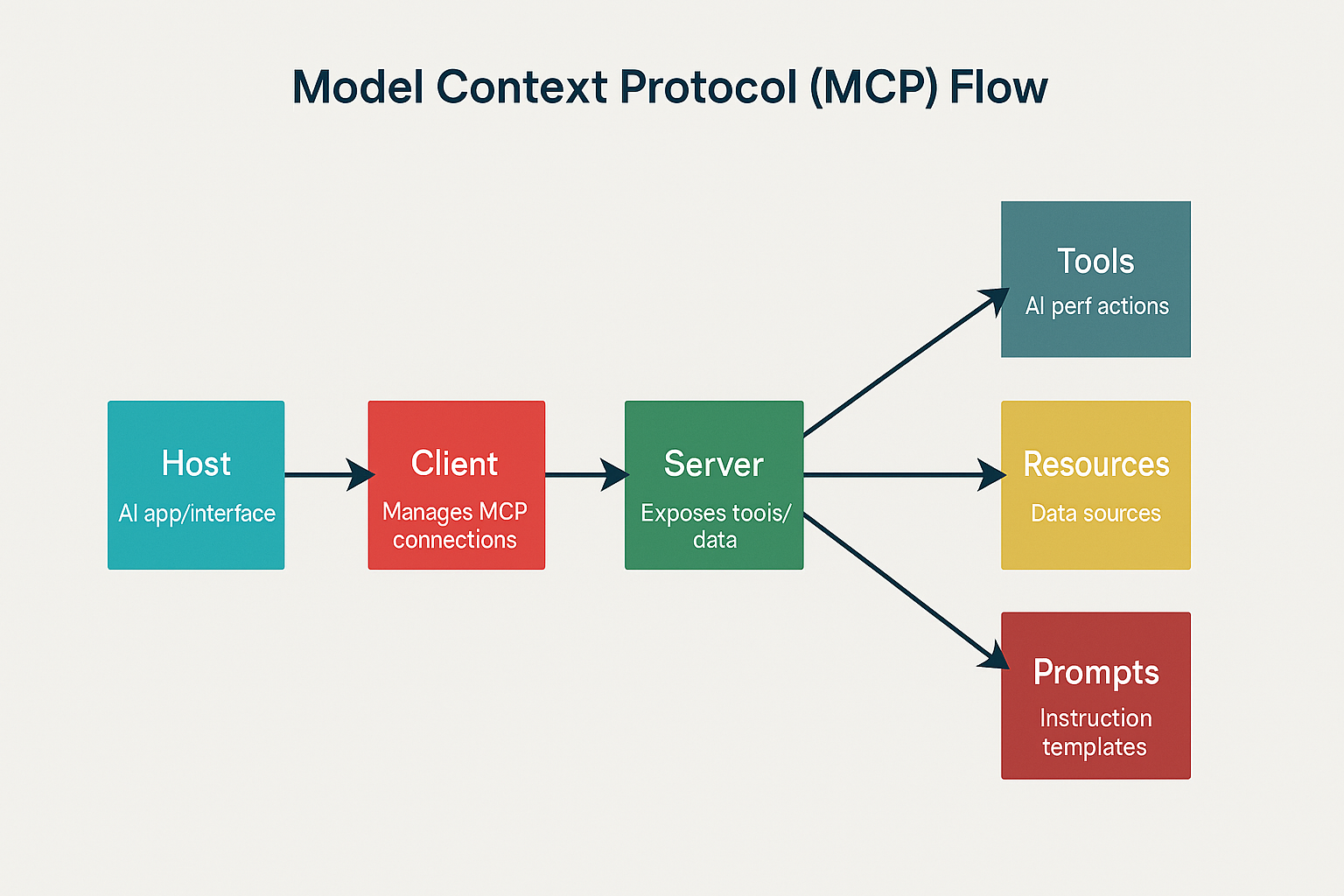

The Model Context Protocol (MCP) is an open standard, introduced by Anthropic in 2024, that lets AI systems discover and securely use tools, data and prompts without bespoke connectors for every integration.

MCP is a universal protocol that connects large-language-model (LLM)–powered apps to external tools, data and services through a single, consistent interface. It eliminates brittle "point-to-point" integrations by standardising discovery, context sharing and permissioning across the entire stack.

| Challenge with REST | How MCP Solves It |
|---|---|
| 1 · Dynamic context – REST is stateless; LLM agents need memory across multi-step workflows. | Built-in session & conversation context lets agents "think" over extended tasks. |
| 2 · N × M integrations – Connecting every tool to every AI app point-to-point takes N × M bespoke connectors. | "Build once, connect many" architecture cuts integration work to roughly one adapter per tool and one per AI app. |
| 3 · Intent & usage metadata – APIs tell you what you can call, not when or why. | MCP bundles prompts & examples so agents know how to use each tool. |
| 4 · Enterprise-grade security – REST lacks fine-grained, human-readable scopes. | Consent flows & granular scopes are baked into the spec. |
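The N × M point in row 2 can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names and the sample counts are assumptions, and it treats "one adapter per tool plus one per AI app" as a simplified model of the "build once, connect many" idea.

```python
def point_to_point_connectors(num_tools: int, num_ai_apps: int) -> int:
    """Bespoke integrations: every AI app needs its own connector to every tool."""
    return num_tools * num_ai_apps


def shared_protocol_adapters(num_tools: int, num_ai_apps: int) -> int:
    """Shared protocol: each tool ships one server, each AI app ships one client."""
    return num_tools + num_ai_apps


if __name__ == "__main__":
    # Hypothetical team sizes, chosen only to show how the gap widens.
    for tools, apps in [(3, 2), (10, 5), (50, 20)]:
        print(
            f"{tools} tools x {apps} apps: "
            f"{point_to_point_connectors(tools, apps)} connectors vs "
            f"{shared_protocol_adapters(tools, apps)} adapters"
        )
```

With 10 tools and 5 AI apps, that is 50 bespoke connectors against 15 protocol adapters under this simplified model.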

| Component | Role |
|---|---|
| Host | Front-end AI interface (chatbot, IDE, mobile app). |
| Client | Maintains the JSON-RPC connection to an MCP server (for example over stdio or HTTP). |
| Server | Publishes tool catalogue, resources and prompts. |
| Tools | Discrete actions the AI can invoke (e.g., "create-ticket", "send-email"). |
| Resources | Data sources such as CRMs, wikis, or databases. |
| Prompts | Instruction templates guiding the AI's behaviour with each tool or dataset. |

Figure 1 — High-level data-flow: the Host talks to a Client which in turn connects to an MCP Server exposing Tools, Resources and Prompts.
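To make the Client and Server roles more tangible, here is a minimal in-memory sketch of the two messages a client typically sends: `tools/list` to discover the catalogue and `tools/call` to invoke a tool. The method names and the JSON-RPC 2.0 envelope follow the MCP specification; everything else (the `create_ticket` handler, the `TOOLS` registry, `handle_request`) is hypothetical and stands in for a real server. It is a toy dispatcher, not the official SDK, and it skips initialization, transport and authorization entirely.

```python
import json


def create_ticket(title: str) -> str:
    """Hypothetical tool handler; a real one would call an issue tracker."""
    return f"Created ticket: {title}"


# Hypothetical tool registry: name -> metadata plus the Python handler.
TOOLS = {
    "create-ticket": {
        "description": "Open a ticket in a (hypothetical) issue tracker.",
        "inputSchema": {
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
        "handler": create_ticket,
    }
}


def _error(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Toy server side: dispatch one JSON-RPC request and return the response."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Publish the catalogue without leaking the Python handlers.
        result = {
            "tools": [
                {
                    "name": name,
                    "description": tool["description"],
                    "inputSchema": tool["inputSchema"],
                }
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(request_id, -32601, f"Method not found: {method}")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    # Client side: discover the tools, then invoke one of them.
    listing = handle_request(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
    print(listing)
    call = handle_request(
        json.dumps(
            {
                "jsonrpc": "2.0",
                "id": 2,
                "method": "tools/call",
                "params": {"name": "create-ticket", "arguments": {"title": "Printer is on fire"}},
            }
        )
    )
    print(call)
```

In a real deployment the Host would embed a Client that sends these messages over a transport such as stdio or HTTP, and the Server would sit in a separate process; the in-process function call here only illustrates the message shapes.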

MCP is poised to become the backbone for context-aware, tool-using AI. Teams that adopt it early can cut integration cost, tighten security and unlock sophisticated autonomous workflows.
