Databricks Foreign Catalogs

A deep dive into Databricks Foreign Catalogs for Glue Iceberg table access

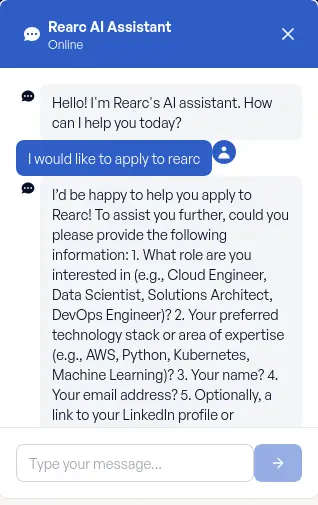

Hello everyone, my name is Sanjiv Raote and I'm currently pursuing a B.A. in Data Science and AI at the University of Miami, FL. As an intern at Rearc I worked on an exciting project that involved developing and deploying an AI ChatAgent for the company's website. The main goal of the project was to create an effective way to collect the information of potential candidates interested in working at Rearc.

Imagine a website where visitors can get instant, relevant answers to their questions about the company or career opportunities. That was the vision I had while creating Rearc's AI Chat Agent, and it is also why I chose GPT-4o-mini to power the chatbot. I found GPT-4o-mini to be the right balance between cost-effectiveness and response quality.

The chatbot is built using multiple AWS services with a Lambda function for the core chatbot logic.

WebsiteChatAgentHistory table).

Building this system from the ground up, especially as an intern, involved navigating and overcoming a series of significant technical hurdles in AWS:

One of the most stubborn issues was resolving Runtime.ImportModuleError: No module named 'pydantic_core._pydantic_core'. This error, tied to core Python libraries (pydantic, openai, langchain), stemmed from an incompatibility between dependencies built in a Windows development environment and the Linux runtime used by AWS Lambda.
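One common way to avoid this class of error is to ask pip for prebuilt Linux wheels even when packaging on Windows. The sketch below is not the project's actual build script; the function names, the `package` directory, the Python version, and the `manylinux2014_x86_64` platform tag are all assumptions that would need to match the real Lambda runtime and architecture.

```python
import subprocess
import sys
from pathlib import Path


def build_pip_command(requirements: str, target_dir: str,
                      python_version: str = "3.12",
                      platform: str = "manylinux2014_x86_64") -> list[str]:
    """Build a pip command that downloads Linux wheels regardless of host OS.

    The defaults are assumptions: adjust python_version and platform to the
    Lambda runtime and architecture actually in use.
    """
    return [
        sys.executable, "-m", "pip", "install",
        "--requirement", requirements,
        "--target", target_dir,        # install into a folder that gets zipped
        "--platform", platform,        # request manylinux wheels, not Windows ones
        "--implementation", "cp",      # CPython wheels
        "--python-version", python_version,
        "--only-binary=:all:",         # required by pip when --platform is set
        "--upgrade",
    ]


def install_lambda_dependencies(requirements: str = "requirements.txt",
                                target_dir: str = "package") -> None:
    """Run pip so that target_dir contains Lambda-compatible dependencies."""
    Path(target_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_pip_command(requirements, target_dir), check=True)
```

Calling `install_lambda_dependencies()` from the project root would fill `package/` with the Linux builds of compiled modules such as `pydantic_core`, ready to be zipped together with the handler code.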

manylinux-compatible dependencies were generated instead of the Windows versions.

Getting the Lambda function to communicate securely with external internet resources (like OpenAI) and private databases (like Aurora for RAG, in a later phase) while inside a VPC was a major test. Initially, requests made from inside the VPC failed with a 504 "Endpoint request timed out" error, which indicated that the Lambda function could not reach the internet.

Ensuring precise access controls and service configurations was a continuous challenge:

- secretsmanager:GetSecretValue denials required debugging IAM policies, ensuring exact ARN matches for all of my AWS resources, and confirming that each policy was attached to the Lambda's execution role.
- ValidationException errors for DynamoDB required attention to detail when defining tables (e.g., exact, case-sensitive primary key names like SessionId and HistoryId) and ensuring that the Lambda code's boto3 calls matched those names exactly.
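To illustrate the DynamoDB point, here is a minimal sketch of checking key names before writing an item. It assumes, purely for illustration, that SessionId and HistoryId are both keys of the WebsiteChatAgentHistory table; the other attribute names (`Role`, `Content`, `CreatedAt`) and all function names are hypothetical and not taken from the project.

```python
import time
import uuid

# Assumption: both keys belong to this one table. Key names are case-sensitive.
TABLE_NAME = "WebsiteChatAgentHistory"
REQUIRED_KEYS = ("SessionId", "HistoryId")


def build_history_item(session_id: str, role: str, content: str) -> dict:
    """Build one chat-history item using the exact key names DynamoDB expects."""
    return {
        "SessionId": session_id,
        "HistoryId": str(uuid.uuid4()),
        "Role": role,                    # hypothetical attribute
        "Content": content,              # hypothetical attribute
        "CreatedAt": int(time.time()),   # hypothetical attribute
    }


def check_key_names(item: dict, required_keys=REQUIRED_KEYS) -> None:
    """Raise KeyError early if a key is missing or differs only by case."""
    for key in required_keys:
        if key in item:
            continue
        lookalikes = [name for name in item if name.lower() == key.lower()]
        hint = f" (found {lookalikes[0]!r} instead)" if lookalikes else ""
        raise KeyError(f"missing key attribute {key!r}{hint}")


def save_history_item(item: dict, table_name: str = TABLE_NAME) -> None:
    """Validate key names locally, then write the item with boto3."""
    import boto3  # imported lazily so the helpers above work without AWS access

    check_key_names(item)
    boto3.resource("dynamodb").Table(table_name).put_item(Item=item)
```

Validating locally turns a misspelled key such as `sessionid` into an immediate, readable error instead of a ValidationException returned by the service.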

In its current state, the AI ChatAgent is operational. It responds intelligently to user prompts, maintains conversation context, and captures lead information for potential candidates.

Potential features I would add:

pgvector to allow the chatbot to parse through and answer questions based on Rearc's blog content.

This project has been a great learning experience, so I would like to give a huge thanks to:
