Rearc-Cast: AI-Powered Content Intelligence System

Learn how we built Rearc-Cast, an automated system that creates podcasts, generates social summaries, and recommends relevant content.

The Moscone Center was bustling, and conference-goers were greeted by the sounds of “Yoshimi Battles the Pink Robots” by The Flaming Lips as they walked through the doors. I like to think the DJ chose it in response to the clear message Databricks had for attendees: AI agents are here, and they’re ready to become a viable part of your organization’s strategy.

Maybe Yoshimi didn’t need to learn karate after all, as it appears the “robots” are fighting on our side this time around.

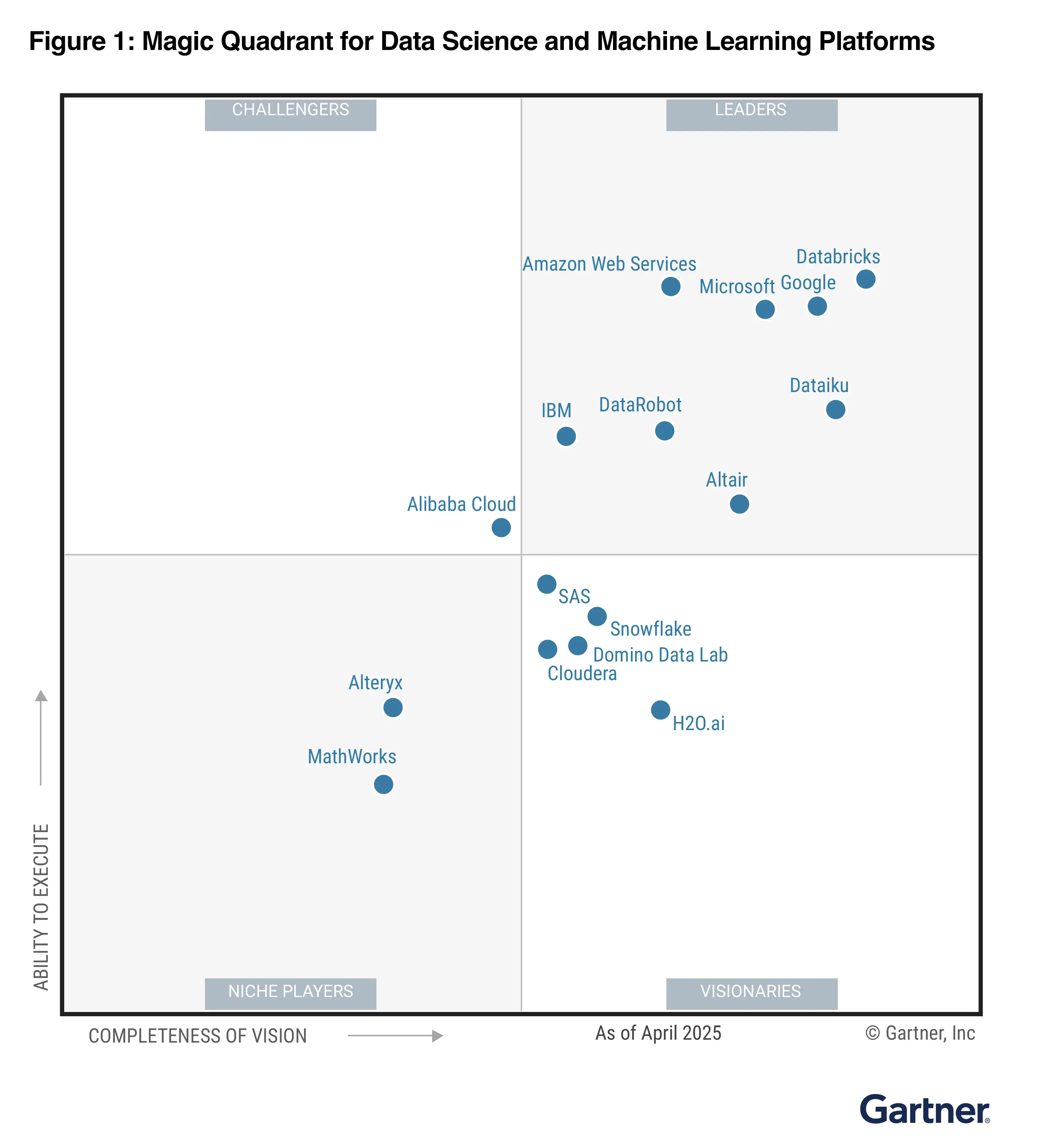

A couple of weeks before this year's Data and AI Summit, Databricks was named the leader in the 2025 Gartner Magic Quadrant for Data Science and Machine Learning Platforms. Competing with the likes of AWS, GCP, and Microsoft Azure is impressive enough. Beating them, even by a slim margin, speaks volumes about the success customers are experiencing on the Databricks platform.

It was clear that Ali Ghodsi, the CEO of Databricks, had made it a mission to lead this space by providing the most comprehensive and reliable AI platform, not just for traditional ML workloads but also for the rapidly expanding needs of GenAI, LLM, and agentic pipelines and applications. Gartner’s ranking supports that ambition, and the tone of the summit seemed to reflect a quiet confidence: a sense that Databricks is even further ahead than the chart alone reveals.

Here are the announcements that will continue to set Databricks apart from the competition in this space:

Even with the functionality available before these releases, tracing complex agentic pipelines on the Databricks platform was difficult. The new tracing features, combined with a redesigned UI that offers trace tree visualizations and streamlined step views, make debugging complex agentic applications much more intuitive and effective.
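To make the idea of a trace tree concrete, here is a minimal sketch in plain Python. It is not the Databricks or MLflow tracing API; the `Tracer` and `Span` names and the step names are hypothetical, chosen only to show what a nested trace of an agent run records and how it can be rendered as an indented tree.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Span:
    """One step in an agent run (hypothetical structure, for illustration)."""
    name: str
    children: List["Span"] = field(default_factory=list)
    duration_ms: float = 0.0


class Tracer:
    """Records nested spans so a run can be rendered as a tree."""

    def __init__(self) -> None:
        self.roots: List[Span] = []
        self._stack: List[Span] = []

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        node = Span(name)
        # Attach to the currently open span, or start a new root.
        if self._stack:
            self._stack[-1].children.append(node)
        else:
            self.roots.append(node)
        self._stack.append(node)
        start = time.perf_counter()
        try:
            yield node
        finally:
            node.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()

    def render(self) -> List[str]:
        """Return one indented line per span, depth-first."""
        lines: List[str] = []

        def walk(node: Span, depth: int) -> None:
            lines.append("  " * depth + node.name)
            for child in node.children:
                walk(child, depth + 1)

        for root in self.roots:
            walk(root, 0)
        return lines


tracer = Tracer()
with tracer.span("agent.run"):
    with tracer.span("retrieve_documents"):
        pass  # a vector search call would go here
    with tracer.span("call_llm"):
        with tracer.span("tool:lookup_order"):
            pass  # a tool invocation requested by the model

tree = tracer.render()
print("\n".join(tree))
```

Each nested `with` block becomes a child node, which is why a slow or failing tool call can be found by walking down the tree rather than by reading a flat log.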

Once a GenAI application is in production, it’s important to continuously monitor the quality of the responses delivered to end users. This enhancement lets you use an LLM-powered judge to determine whether a response was a hallucination, whether the end user’s question was actually resolved, and whether answers were both safe and appropriate, by evaluating the intention and sentiment of messages from both the user and the system.
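The judge pattern can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the Databricks implementation: the `Interaction` type, the `evaluate` helper, and both stub judges are hypothetical, and the stubs use simple string checks where a real judge would call an LLM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Interaction:
    question: str
    context: str  # source text the answer should be grounded in
    answer: str


# A judge maps one interaction to pass/fail. In production this would wrap
# an LLM call; here it is any callable with this signature.
Judge = Callable[[Interaction], bool]


def _words(text: str) -> Set[str]:
    return {w.strip(".,!?").lower() for w in text.split()}


def stub_groundedness_judge(item: Interaction) -> bool:
    """Stand-in for a hallucination check: every answer word must appear in the context."""
    return _words(item.answer) <= _words(item.context)


def stub_resolution_judge(item: Interaction) -> bool:
    """Stand-in for a 'was the question resolved?' check."""
    return "i don't know" not in item.answer.lower()


def evaluate(items: List[Interaction], judges: Dict[str, Judge]) -> Dict[str, float]:
    """Return the pass rate of each judge over a batch of logged interactions."""
    return {
        name: sum(judge(item) for item in items) / len(items)
        for name, judge in judges.items()
    }


context = "The store opens at 9 am."
logged = [
    Interaction("When does the store open?", context, "The store opens at 9 am."),
    Interaction("When does the store open?", context, "The store opens at 7 am."),
    Interaction("Do you ship abroad?", context, "I don't know."),
]

scores = evaluate(
    logged,
    {"grounded": stub_groundedness_judge, "resolved": stub_resolution_judge},
)
print(scores)
```

Keeping each judge behind the same callable signature means a string-matching stub can be swapped for a model-backed judge without changing the monitoring loop.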

Traces aren’t the only feature getting a first-class revamp. Prompts are now tracked with Git-like versioning, including commit messages and rollback support. Prompts can also be stored in Unity Catalog as a “function”, which means all the governance capabilities of UC apply directly to your prompts. With integrations into LangChain, LlamaIndex, and other GenAI frameworks, this change lets teams collaborate on prompts more easily, track lineage by linking prompts to experiments and their results, and build a prompt repository that spans the entire organization. With the DSPy integration as well, we expect this feature to be widely used by customers who already maintain their AI apps on the Databricks platform.
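Here is a minimal sketch of what Git-like prompt versioning means in practice, written in plain Python. It does not use the MLflow or Unity Catalog APIs; `PromptRegistry`, `PromptVersion`, and the prompt name are hypothetical and exist only to show commit messages, version lookup, and rollback that preserves history.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    commit_message: str


class PromptRegistry:
    """In-memory, append-only store of prompt versions (illustrative only)."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}

    def commit(self, name: str, template: str, commit_message: str) -> PromptVersion:
        history = self._history.setdefault(name, [])
        entry = PromptVersion(len(history) + 1, template, commit_message)
        history.append(entry)
        return entry

    def load(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Return the latest version, or a specific one if requested."""
        history = self._history[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise ValueError(f"{name} has no version {version}")
        return history[version - 1]

    def rollback(self, name: str, version: int) -> PromptVersion:
        """Re-commit an earlier version as the newest one, keeping history intact."""
        old = self.load(name, version)
        return self.commit(name, old.template, f"Rollback to v{version}")


registry = PromptRegistry()
registry.commit("support_answer", "Answer the question: {question}", "Initial prompt")
registry.commit(
    "support_answer",
    "Answer briefly and cite sources: {question}",
    "Ask for citations",
)

# Roll back to v1; this creates v3 rather than deleting v2.
restored = registry.rollback("support_answer", 1)
prompt = registry.load("support_answer").template.format(
    question="How do I reset my password?"
)
print(restored.version, prompt)
```

Modeling rollback as a new commit, rather than a deletion, keeps every version addressable, which is what makes it possible to link a prompt version to the experiment results it produced.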

Previously, when you wanted to train and fine-tune custom models on the Databricks platform, serverless compute was not the most efficient or compatible option. With serverless compute now supporting GPU acceleration, you can run GPU-accelerated training jobs in serverless environments, thanks to the inclusion of three commonly used packages: CUDA, PyTorch, and Torchvision. Serverless GPU compute does not replace the Databricks ML Runtime, but it provides another option for the subset of workloads that don’t need the full ML runtime and the resources it makes available.

You can now build and deploy secure data and AI applications directly on the Databricks platform, backed by serverless architecture and with direct integration to Unity Catalog, Databricks SQL, model serving for deploying AI models, and a number of other platform features. Previously, you may have had to host your chatbot front end and application code in a separate environment and then integrate them back with the services you needed from Databricks. Now you can host everything in one place and deploy a Streamlit interface for your agentic pipelines to any relevant workspace.

Lakebase is a fully managed, Postgres-compatible database service that brings traditional transactional workloads into the Databricks ecosystem. What makes Lakebase notable is its native integration with Unity Catalog and its optimization for GenAI use cases, particularly Retrieval-Augmented Generation (RAG) and LLM-powered applications. With Lakebase, Databricks closes the gap between unstructured and structured data systems, giving organizations a unified and governed foundation for their AI workflows. This offering reinforces Databricks' commitment to being the single, cohesive platform for modern data and AI development. To learn more, check out this post from Rearc Lead Engineer Dan Noventa, which goes into more detail on Lakebase and other topics from the Data and AI Summit.

It’s clear Databricks wants to offer the most comprehensive platform possible, in a way that respects the needs of developers, analysts, and infrastructure teams just as much as those of end users, business leaders, and other stakeholders. If they’re all going to work on the same platform, no one’s experience should suffer for making the move. From what we have seen so far, Databricks understands this on a deep level, and we expect them not only to lead the pack in 2025 but to continue shaping the future of enterprise AI.
