Databricks Foreign Catalogs

A deep dive into Databricks Foreign Catalogs for Glue Iceberg table access

After you have set up your Databricks account and created your first workspaces, what’s next? In this blog post, we go rapid fire through seven default Databricks settings you should consider changing.

Even if you are a Databricks veteran, I’m sure you’ll find something here worth your time. Let’s dive into it!

You might be surprised to find out that by default, workspace admins can set up Databricks Workflows that run as any user in their workspace. This effectively means that workspace admins have the permissions of every user. Let’s change that!

Use the CLI command `databricks settings restrict-workspace-admins` on each workspace as an account admin to enable the setting.

Yes, you have to configure every workspace! Make it painless by automating via Terraform.
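If Terraform is not an option yet, the per-workspace repetition can also be scripted. The following is a minimal sketch that only builds the CLI invocations, one per workspace, without running them. The profile names are hypothetical, and the `update` subcommand, the `--json` and `--profile` flags, and the request body (including the `RESTRICT_TOKENS_AND_JOB_RUN_AS` value) are assumptions; check them against `databricks settings restrict-workspace-admins --help` and the settings API reference before use.

```python
import json
import shlex

# Hypothetical profile names from ~/.databrickscfg; replace with your own.
WORKSPACE_PROFILES = ["dev", "staging", "prod"]

# Assumed request body for the restrict-workspace-admins setting. Verify the
# field names and the enum value against the Databricks documentation.
SETTING_BODY = {
    "allow_missing": True,
    "setting": {
        "restrict_workspace_admins": {"status": "RESTRICT_TOKENS_AND_JOB_RUN_AS"}
    },
    "field_mask": "restrict_workspace_admins.status",
}


def build_commands(profiles):
    """Return one shell-quoted CLI command string per workspace profile."""
    payload = json.dumps(SETTING_BODY, sort_keys=True)
    commands = []
    for profile in profiles:
        argv = [
            "databricks", "settings", "restrict-workspace-admins", "update",
            "--json", payload,       # assumed flag for passing the request body
            "--profile", profile,    # assumed flag for selecting the workspace
        ]
        commands.append(shlex.join(argv))
    return commands


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for command in build_commands(WORKSPACE_PROFILES):
        print(command)
```

Printing rather than executing keeps the sketch safe to run anywhere; once the commands look right for your CLI version, they can be piped to a shell or run through `subprocess`.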

In any account created after May 1, 2025 that uses SSO, new users are automatically provisioned. While this streamlines onboarding for new users, it may not be appropriate for your enterprise security concerns. Many organizations have structured processes for requesting access to systems, particularly ones that may contain sensitive information. Disable it by using the Databricks Account console and moving the slider from Enabled. Unfortunately, no API access currently exists for updating this.

You have your Databricks Account set up with SSO, so everything is golden, right? Well, perhaps not!

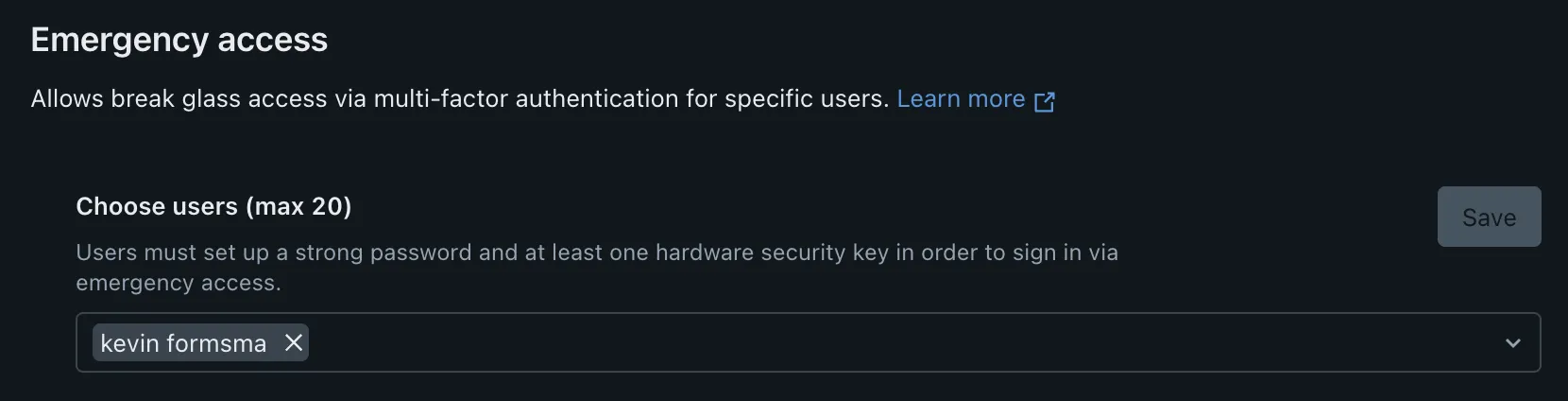

What happens if your SSO provider goes down? What if someone misconfigures an SSO change, locking you out?

These situations can be handled by setting up emergency access users. These users are able to log in via password and MFA, bypassing SSO. By default, this list is empty. Prevent the need to contact support by setting up these users ahead of time. For the strongest security, use hardware MFA for these emergency access accounts.

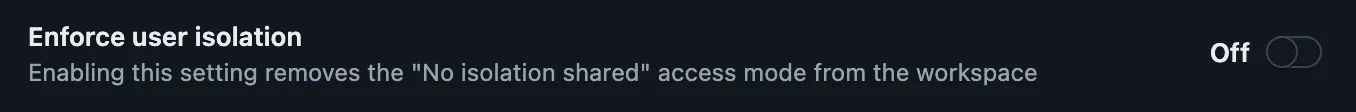

Newer Databricks compute types, Dedicated and Standard clusters, provide enhanced security compared to traditional "No Isolation" Shared clusters. In a No Isolation Shared cluster, user permission tokens or credentials are accessible to any user running on the cluster (hence, no isolation). This can be problematic if you have privileged users running on such a cluster.

If possible, you can simply disable this cluster type at the account level. If you require this cluster type due to various limitations with the others, you can enable some protection for workspace admin users. Finally, the Terraform provider has support for disabling this cluster type alongside other legacy features.
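Before disabling the cluster type, it helps to know which existing clusters still depend on it. Here is a minimal sketch of that audit, run against hypothetical sample data shaped like a cluster listing. The `data_security_mode` field and the `NONE` value for No Isolation Shared are assumptions about the listing format, and treating a missing field as unisolated is a deliberately conservative choice of this sketch.

```python
# Hypothetical sample shaped like entries from a cluster listing. The
# "data_security_mode" field and its "NONE" value are assumptions here.
SAMPLE_CLUSTERS = [
    {"cluster_name": "etl-nightly", "data_security_mode": "SINGLE_USER"},
    {"cluster_name": "legacy-shared", "data_security_mode": "NONE"},
    {"cluster_name": "analytics", "data_security_mode": "USER_ISOLATION"},
    {"cluster_name": "old-adhoc"},  # field missing: flagged to be safe
]


def find_no_isolation_clusters(clusters):
    """Return names of clusters that appear to run without user isolation."""
    flagged = []
    for cluster in clusters:
        # A missing mode is treated as "NONE" so that nothing slips through.
        mode = cluster.get("data_security_mode", "NONE")
        if mode == "NONE":
            flagged.append(cluster["cluster_name"])
    return flagged


if __name__ == "__main__":
    for name in find_no_isolation_clusters(SAMPLE_CLUSTERS):
        print(f"review before disabling No Isolation Shared: {name}")
```

In practice the list would come from your own cluster inventory rather than a hard-coded sample.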

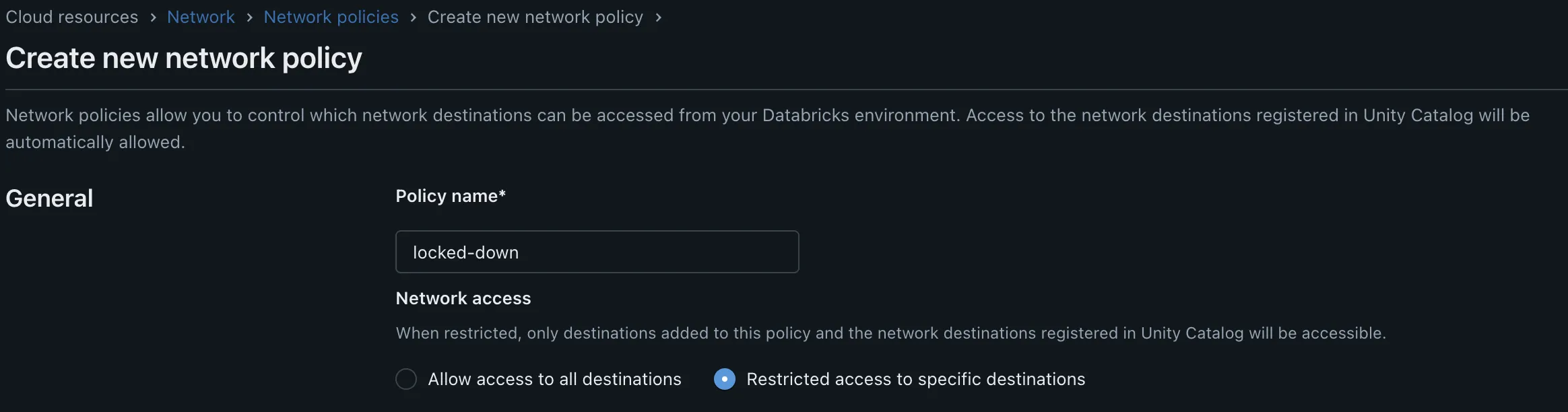

Serverless compute is great - but it operates outside your cloud compute plane, where established network controls that you take for granted might not exist. By default, serverless uses an "allow-all" egress policy. Perhaps this is right for your organization, but more likely you will want to limit egress to an allowlist of well-accepted endpoints to prevent potential data exfiltration.

Set up an appropriate policy at the account level, and apply it to your workspaces. Go the distance by codifying your policies in Terraform.
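To make the difference between "allow-all" and an allowlist concrete, here is a small illustrative sketch of deny-by-default matching. It is not how Databricks evaluates egress policies; the domain list and the exact-hostname matching rule are assumptions chosen for the example.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; a real policy would list the endpoints your
# workloads actually need.
ALLOWED_DOMAINS = frozenset({"pypi.org", "files.pythonhosted.org"})


def is_egress_allowed(url, allowed=ALLOWED_DOMAINS):
    """Deny by default: allow only URLs whose hostname is on the allowlist."""
    # urlsplit lowercases the hostname; it is None when no host can be parsed.
    host = urlsplit(url).hostname or ""
    return host in allowed


if __name__ == "__main__":
    for url in (
        "https://pypi.org/simple/requests/",
        "https://paste.example.net/upload",
    ):
        verdict = "allow" if is_egress_allowed(url) else "deny"
        print(f"{verdict}: {url}")
```

Exact hostname matching is the strictest option; whether subdomains or wildcards should match is a policy decision to make deliberately rather than by accident.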

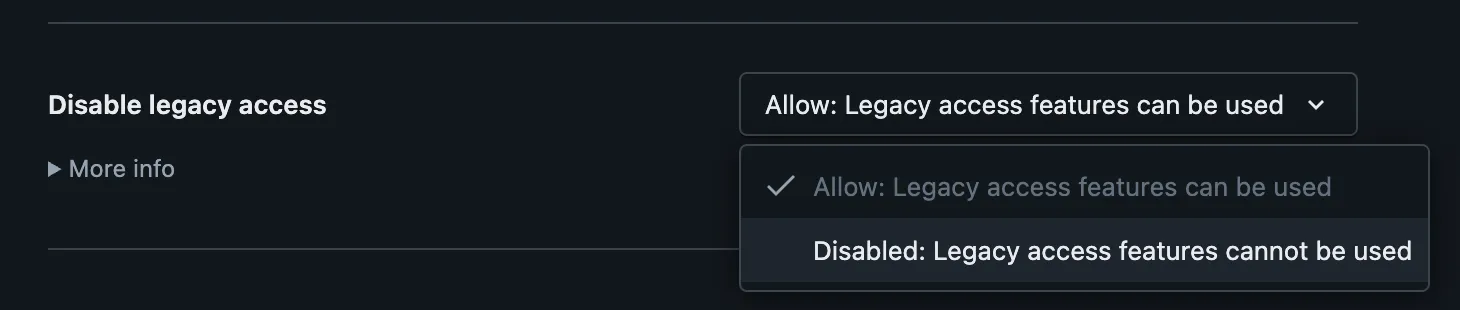

The modern Databricks Lakehouse is built around Unity Catalog. Especially for new accounts and workspaces, the existence of the legacy Hive metastore just causes confusion among new Databricks users.

You can disable the legacy Hive metastore at the account level for newly created workspaces or at the workspace level for existing workspaces. Likewise, build it into your Terraform IaC with either the account or workspace resources.

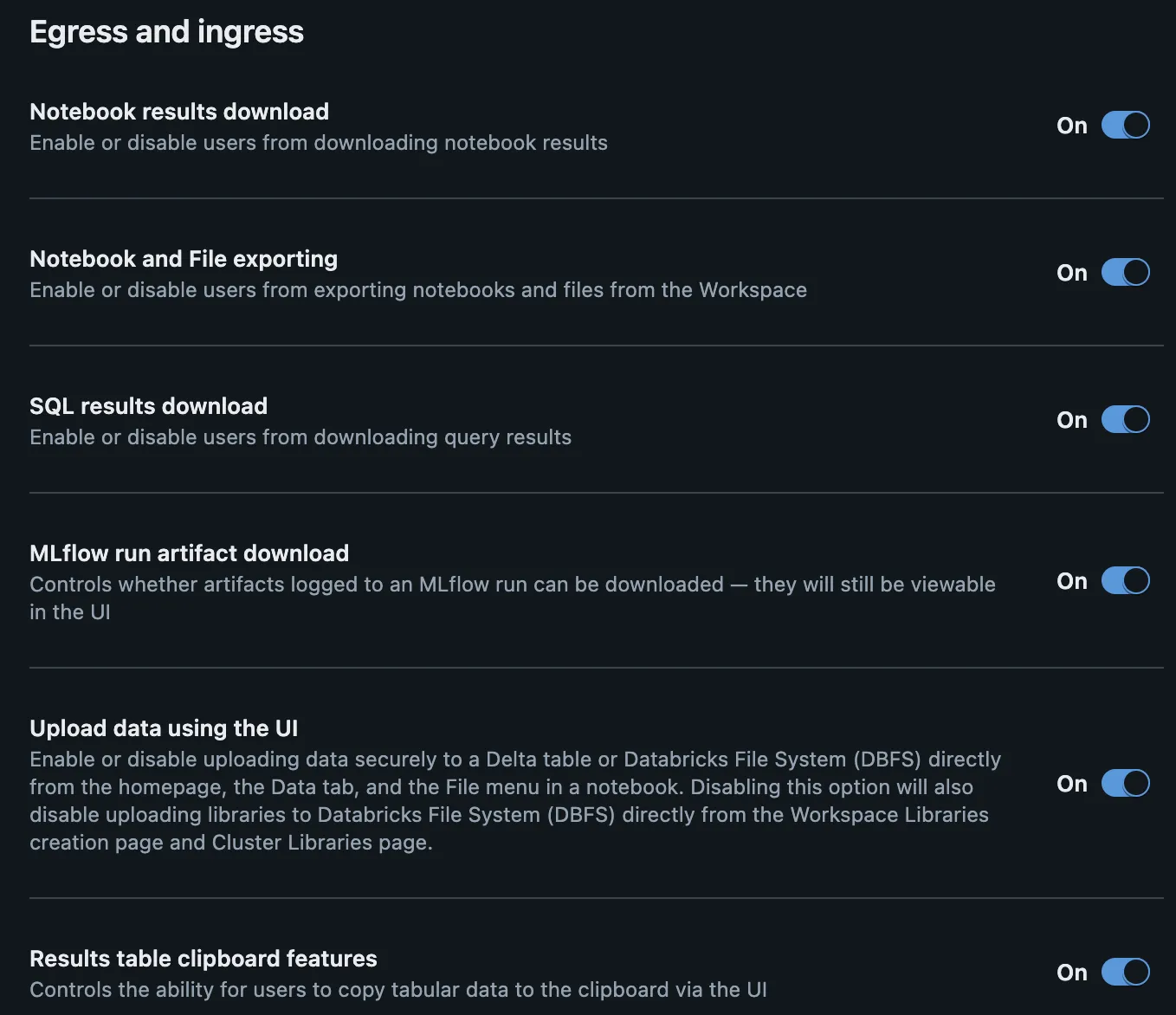

By default, you are able to interact with notebooks to download results. For instance, you can download the results of an SQL query or an MLflow artifact. Depending on your data use cases or environments, this might be too permissive. For example, in a production workspace you may not want to allow the download of results from the platform.

You can review the settings for controlling notebooks and queries, as well as restricting uploads.
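One way to keep these choices consistent is to derive them from the environment, so production is locked down while development stays convenient. The sketch below only builds a settings dictionary and does not call any API. The key names are assumptions based on commonly documented workspace configuration keys, and the "true"/"false" string values are likewise assumed; confirm both in the Databricks documentation before applying anything.

```python
# Assumed workspace configuration keys controlling downloads and exports.
# Confirm each key name in the Databricks documentation before use.
ASSUMED_DOWNLOAD_KEYS = (
    "enableResultsDownloading",
    "enableNotebookTableClipboard",
    "enableExportNotebook",
)

# Hypothetical environment names for this example.
LOCKED_DOWN_ENVIRONMENTS = {"prod"}


def download_settings_for(environment, keys=ASSUMED_DOWNLOAD_KEYS):
    """Return a key -> "true"/"false" mapping for the given environment."""
    allow = environment not in LOCKED_DOWN_ENVIRONMENTS
    value = "true" if allow else "false"
    return {key: value for key in keys}


if __name__ == "__main__":
    for env in ("dev", "prod"):
        print(env, download_settings_for(env))
```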

Hopefully you learned about a setting or two that you need to review within your Databricks account. It’s hard for a platform like Databricks to create default settings that work for everyone. However, it’s essential for you to understand the configuration options available.

Continue your journey by taking a look through Databricks’ Security Best Practices checklist and harden your environment to meet your needs.
