Databricks Foreign Catalogs

A deep dive into Databricks Foreign Catalogs for Glue Iceberg table access

Simple Storage Service (S3) is AWS’ binary large object (blob) storage system. It can store an unlimited number of files (up to 5TB in size per file) in a highly durable, dependable, and secure manner. This allows S3 to serve a multitude of use cases, such as publicly accessible static website assets, private data lake files, service logs, system backups, and many others.

Each of these uses requires different permission levels and controls. Website assets may be accessible to anyone, and data lake files may only be accessible to analytics processes. Service logs may be generated by, or need to be made available to, third-party monitoring and aggregation tools. In an enterprise environment, access control is paramount. In this post, we will look at one of the ways these controls are implemented in S3: object ownership.

S3 is composed of two core “building blocks”: buckets and objects, with a single bucket capable of storing zero to many objects. The account that creates an S3 bucket is referred to as the “bucket owner”. Typically, the bucket owner also retains ownership of and control over stored objects. However, this is not always the case, and it can be configured during bucket creation or at a later point in time.

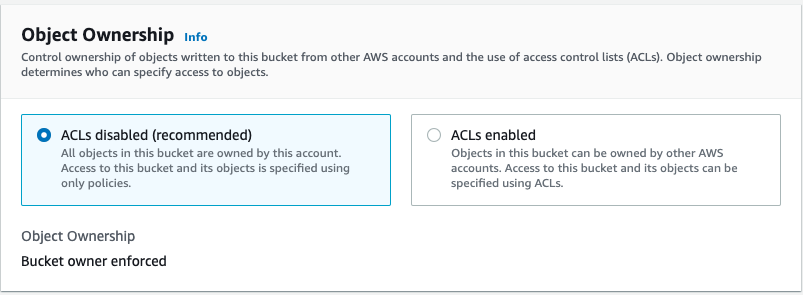

During the creation of an S3 bucket, AWS allows you to declare, at a high level, who owns the objects in the bucket. This applies whether you create the bucket through the AWS Console, Terraform, or any other IaC tool. The owner is either ALWAYS the bucket owner, or, in some cases, a different account. This is controlled by disabling or enabling ACLs, respectively.

Example of configuring bucket ownership in Terraform.

```hcl
resource "aws_s3_bucket" "my-s3-tf-bucket" {
  bucket = "my-s3-tf-bucket"
}

resource "aws_s3_bucket_ownership_controls" "my-s3-tf-bucket-control" {
  bucket = aws_s3_bucket.my-s3-tf-bucket.id

  rule {
    # ACLs enabled: objects uploaded with the bucket-owner-full-control ACL
    # are owned by the bucket owner. Use "BucketOwnerEnforced" to disable ACLs.
    object_ownership = "BucketOwnerPreferred"
  }
}

resource "aws_s3_bucket_acl" "my-s3-tf-bucket-acl" {
  # The ownership controls must exist before an ACL can be applied
  depends_on = [aws_s3_bucket_ownership_controls.my-s3-tf-bucket-control]

  bucket = aws_s3_bucket.my-s3-tf-bucket.id
  acl    = "private"
}
```

Historically, access control lists (ACLs) were the primary mechanism for granting permissions on S3 objects. An ACL could grant read, write, and full-control permissions, with up to 100 grants per ACL. This approach has been superseded by Identity and Access Management (IAM) resource policies, which we will discuss shortly.

The default setting is for ACLs to be disabled, which prevents other accounts from owning objects in the bucket. Consequently, the bucket owner is enforced as the object owner. Note that objects may still be uploaded by accounts other than the bucket owner account. Alternatively, ACLs may be enabled. This allows objects to be owned by either the bucket owner or the “object writer”: the account that writes the object to the bucket.

Regardless of who owns the bucket, the object owner always retains primary access control over an object. This includes the ability to grant read/write access to other identities. Interestingly, the object owner may also delegate the ability to grant read/write access to other identities, including the bucket owner. In doing so, the bucket owner can hold rights similar to those of the object owner. Another account still retains the official “owner” title and the ability to revoke these rights.

However, the bucket owner always retains the ability to delete objects owned by another account, or to deny access to them. This is because the bucket owner is the one footing the S3 bill (so make sure to have a backup!).
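The split of rights between object owner and bucket owner can be sketched as a small Python model. It assumes object ACL grants are represented as a dictionary mapping each grantee to a set of ACL permission names (READ, READ_ACP, WRITE_ACP, FULL_CONTROL). The function `bucket_owner_may` and the operation names are hypothetical. The model deliberately ignores bucket policies and IAM policies, so it shows only the ACL side of the story.

```python
from typing import Dict, Set

# Permissions an object ACL can grant (WRITE is not applicable to objects).
OBJECT_ACL_PERMISSIONS = {"READ", "READ_ACP", "WRITE_ACP", "FULL_CONTROL"}

# Hypothetical operation names -> ACL permission that authorizes them on an object.
REQUIRED_PERMISSION = {
    "read": "READ",            # GetObject
    "read_acl": "READ_ACP",    # GetObjectAcl
    "write_acl": "WRITE_ACP",  # PutObjectAcl, i.e. the right to grant access
}


def granted(grants: Dict[str, Set[str]], grantee: str) -> Set[str]:
    """Return a grantee's permissions, expanding FULL_CONTROL into all of them."""
    perms = set(grants.get(grantee, set()))
    if "FULL_CONTROL" in perms:
        perms |= OBJECT_ACL_PERMISSIONS
    return perms


def bucket_owner_may(
    operation: str,
    bucket_owner: str,
    object_owner: str,
    grants: Dict[str, Set[str]],
) -> bool:
    """Simplified check of what a bucket owner may do with one object."""
    if operation == "delete":
        # The bucket owner can always delete objects in its own bucket.
        return True
    if object_owner == bucket_owner:
        return True
    # Otherwise the bucket owner depends on grants made by the object owner.
    return REQUIRED_PERMISSION[operation] in granted(grants, bucket_owner)
```

In this model, revoking access means the object owner removes the bucket owner's entry from `grants`. The `delete` branch stays true regardless, mirroring the bucket owner's retained control.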

When ACLs are enabled, the object owner can be specified through the ACL parameter during write operations:

```shell
aws s3api put-object --bucket my-s3-bucket --key my-file.txt --body my-file.txt --acl bucket-owner-full-control
```

When the bucket's Object Ownership setting is BucketOwnerPreferred, the bucket-owner-full-control ACL indicates that the bucket owner should own the new object. Conversely, when ACLs are enabled but this ACL is not specified, the object writer is assumed to be the object owner. The writer then retains control of the object.
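The ownership rules above can be condensed into a minimal Python sketch. It decides who ends up owning a newly uploaded object. The enum values match the three Object Ownership setting names S3 uses. The function `resolve_object_owner` and the account IDs are hypothetical. The model ignores authorization entirely. For example, it does not capture that a bucket with ACLs disabled rejects uploads that specify other ACLs.

```python
from enum import Enum
from typing import Optional


class ObjectOwnership(Enum):
    """The three Object Ownership settings an S3 bucket can use."""
    BUCKET_OWNER_ENFORCED = "BucketOwnerEnforced"    # ACLs disabled (the default)
    BUCKET_OWNER_PREFERRED = "BucketOwnerPreferred"  # ACLs enabled
    OBJECT_WRITER = "ObjectWriter"                   # ACLs enabled


def resolve_object_owner(
    setting: ObjectOwnership,
    bucket_owner: str,
    writer: str,
    canned_acl: Optional[str] = None,
) -> str:
    """Return the account that owns a newly uploaded object (simplified model)."""
    if setting is ObjectOwnership.BUCKET_OWNER_ENFORCED:
        # ACLs are disabled, so the bucket owner always owns every object.
        return bucket_owner
    if (
        setting is ObjectOwnership.BUCKET_OWNER_PREFERRED
        and canned_acl == "bucket-owner-full-control"
    ):
        # The writer explicitly hands ownership to the bucket owner.
        return bucket_owner
    # ObjectWriter, or BucketOwnerPreferred without that ACL: the writer owns it.
    return writer
```

With ObjectWriter, the model still returns the writer even when bucket-owner-full-control is supplied. That ACL grants the bucket owner full control but does not transfer ownership under that setting.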

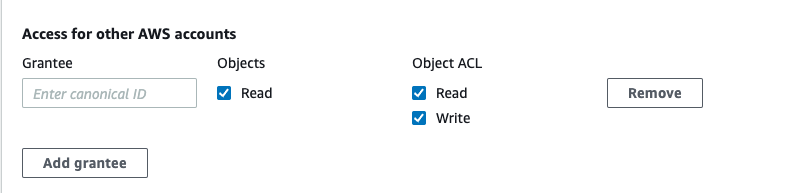

ACLs are maintained in an XML-formatted structure. Rather than specifying the ARNs of the resources (users, groups, roles, services, etc.) that may access an object, they reference an AWS account’s “canonical user ID”. This is a 64-character hexadecimal string that uniquely identifies an entire AWS account. This lack of granularity limits the effectiveness of ACLs and emphasizes the need for resource-based policies.
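To show what that XML looks like, here is a Python sketch using the standard `xml.etree.ElementTree` module. It builds an `AccessControlPolicy` document in the S3 namespace, with one `CanonicalUser` grantee per grant. The helper `build_acl` is hypothetical. It enforces the 100-grant limit mentioned earlier and checks that each grantee is a 64-character lowercase hex ID. The optional `DisplayName` element is omitted for brevity.

```python
import re
import xml.etree.ElementTree as ET
from typing import List, Tuple

S3_NS = "http://s3.amazonaws.com/doc/2006-03-01/"
XSI_NS = "http://www.w3.org/2001/XMLSchema-instance"
CANONICAL_ID = re.compile(r"[0-9a-f]{64}")
PERMISSIONS = {"READ", "WRITE", "READ_ACP", "WRITE_ACP", "FULL_CONTROL"}
MAX_GRANTS = 100


def build_acl(owner_id: str, grants: List[Tuple[str, str]]) -> str:
    """Build an ACL document granting permissions to canonical user IDs."""
    if len(grants) > MAX_GRANTS:
        raise ValueError(f"an ACL can hold at most {MAX_GRANTS} grants")
    root = ET.Element(f"{{{S3_NS}}}AccessControlPolicy")
    owner = ET.SubElement(root, f"{{{S3_NS}}}Owner")
    ET.SubElement(owner, f"{{{S3_NS}}}ID").text = owner_id
    acl = ET.SubElement(root, f"{{{S3_NS}}}AccessControlList")
    for grantee_id, permission in grants:
        # Grants name whole accounts by canonical ID, never individual users or roles.
        if not CANONICAL_ID.fullmatch(grantee_id):
            raise ValueError(f"not a canonical user ID: {grantee_id!r}")
        if permission not in PERMISSIONS:
            raise ValueError(f"unknown permission: {permission!r}")
        grant = ET.SubElement(acl, f"{{{S3_NS}}}Grant")
        grantee = ET.SubElement(
            grant, f"{{{S3_NS}}}Grantee", {f"{{{XSI_NS}}}type": "CanonicalUser"}
        )
        ET.SubElement(grantee, f"{{{S3_NS}}}ID").text = grantee_id
        ET.SubElement(grant, f"{{{S3_NS}}}Permission").text = permission
    # Serialize with the S3 namespace as the default (unprefixed) namespace.
    return ET.tostring(root, encoding="unicode", default_namespace=S3_NS)
```

Passing a 12-digit account ID, or an IAM role ARN, as a grantee fails validation here. This illustrates the granularity problem: an ACL grant can only ever name an entire account.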

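By contrast, resource-based policies leverage AWS IAM policies and all the flexibility they provide. IAM policies let you specify which principals, actions, and resources a statement applies to, and whether it allows or denies them. They also let you specify the conditions under which the statement takes effect.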

Unlike object ACLs, resource-based policies are declared in the bucket configuration, whether they broadly target an entire bucket or a specific file. Additional permissions can be granted through identity-based policies attached to IAM users, groups, and roles. When an API call such as s3:GetObject is made (whether via the AWS CLI or an HTTP request), all relevant policies are aggregated. They are then evaluated together to determine whether the call is authorized.

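AWS evaluates the resource and user policies together. An explicit Deny in any applicable policy overrides any Allow, and a request that no policy allows is implicitly denied.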

While an S3 bucket policy may allow a specific identity (such as an account, user, or role) access to a bucket and select files, an IAM policy attached to the principal making the API request may still conditionally deny the call.
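The interplay described above can be sketched in Python: an explicit Deny anywhere overrides an Allow anywhere, and unmatched requests are implicitly denied. This is a deliberately simplified, hypothetical model. It treats all policies as belonging to the same account and matches actions and resources by exact string (or `"*"`). It ignores conditions, service control policies, permissions boundaries, and session policies. It also ignores the rule that cross-account requests need an Allow on both sides.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Statement:
    effect: str  # "Allow" or "Deny"
    actions: Set[str]
    resources: Set[str] = field(default_factory=lambda: {"*"})


def evaluate(action: str, resource: str, policies: List[List[Statement]]) -> str:
    """Simplified same-account evaluation of bucket and identity policies."""
    matching = [
        s
        for policy in policies
        for s in policy
        if action in s.actions and (resource in s.resources or "*" in s.resources)
    ]
    if any(s.effect == "Deny" for s in matching):
        return "ExplicitDeny"  # an explicit Deny always wins
    if any(s.effect == "Allow" for s in matching):
        return "Allow"
    return "ImplicitDeny"  # nothing allowed the request
```

For example, a bucket policy of `[Statement("Allow", {"s3:GetObject"}, {"arn:aws:s3:::b/k"})]` alone yields `"Allow"`. Adding a caller policy of `[Statement("Deny", {"s3:GetObject"})]` yields `"ExplicitDeny"`. That is the case described above, where the bucket policy allows the read but the caller's IAM policy blocks it.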

Bucket policies can also enforce network-level access controls, such as limiting access to certain VPC endpoints, IP addresses, or AWS services. For example, suppose you host an S3 bucket that receives transaction reports from a number of different customers. You can use policy conditions to restrict each customer to its own prefix path and require that calls arrive through an approved VPC endpoint.

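```json
{
  "Version": "2012-10-17",
  "Id": "S3Access",
  "Statement": [
    {
      "Sid": "S3-GetObject-Via-Specific-VPCE",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws:iam::111122223333:user/customer1"]
      },
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::my-examplebucket/customer1/*"],
      "Condition": {
        "StringEquals": {
          "aws:SourceVpce": "vpce-customer1-aabbcc123456"
        }
      }
    },
    {
      "Sid": "S3-ListBucket-Via-Specific-VPCE",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws:iam::111122223333:user/customer1"]
      },
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::my-examplebucket"],
      "Condition": {
        "StringEquals": {
          "aws:SourceVpce": "vpce-customer1-aabbcc123456"
        },
        "StringLike": {
          "s3:prefix": ["customer1/*"]
        }
      }
    }
  ]
}
```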
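To see how the conditions in this policy combine, here is a hypothetical Python sketch. It encodes the customer1 GetObject permission from the policy above as a plain dictionary and checks a request against it. The helpers `arn_matches` and `statement_allows` are illustrative only. They approximate IAM wildcard matching (`*` spans any characters, including `/`; `?` matches exactly one) and the `StringEquals` operator on `aws:SourceVpce`. They are not how AWS actually evaluates policies.

```python
import re
from typing import Dict


def arn_matches(pattern: str, arn: str) -> bool:
    """IAM-style wildcard match: '*' spans any characters, '?' matches one."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, arn) is not None


# Hypothetical, simplified encoding of the customer1 GetObject permission above.
GET_OBJECT_STATEMENT = {
    "principal": "arn:aws:iam::111122223333:user/customer1",
    "action": "s3:GetObject",
    "resources": ["arn:aws:s3:::my-examplebucket/customer1/*"],
    "source_vpce": "vpce-customer1-aabbcc123456",
}


def statement_allows(
    stmt: Dict, principal: str, action: str, resource: str, context: Dict[str, str]
) -> bool:
    """True when principal, action, resource and the VPCE condition all match."""
    return (
        principal == stmt["principal"]
        and action == stmt["action"]
        and any(arn_matches(p, resource) for p in stmt["resources"])
        # StringEquals on aws:SourceVpce: a missing key never matches.
        and context.get("aws:SourceVpce") == stmt["source_vpce"]
    )
```

A request for `customer1/report.csv` through the listed endpoint matches. The same request without an `aws:SourceVpce` value in its context does not match, and neither does a request for a `customer2/` key.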

Alternatively, you may use S3 to host publicly accessible files for a commercial website. To improve performance and manage costs, however, you may wish to limit requests for these files to a CDN service such as Amazon CloudFront or Cloudflare. A CloudFront origin access identity (OAI) can be granted access to the bucket; AWS now recommends its successor, origin access control. Meanwhile, the IP addresses belonging to Cloudflare can be allowlisted in the bucket policy so that only requests originating from them are permitted.

```json
{
  "Version": "2012-10-17",
  "Id": "S3AccessFromAWSCloudFrontandCloudflare",
  "Statement": [
    {
      "Sid": "AllowCloudFrontOAI",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity <OAI-ID>"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::my-static-assets/images/*"
    },
    {
      "Sid": "AllowIPCloudflare",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": ["arn:aws:s3:::my-static-assets/images/*"],
      "Condition": {
        "IpAddress": {
          "aws:SourceIp": [
            "103.21.244.0/22",
            "103.22.200.0/22",
            "103.31.4.0/22",
            "104.16.0.0/13",
            "104.24.0.0/14",
            "108.162.192.0/18",
            "131.0.72.0/22",
            "141.101.64.0/18",
            "162.158.0.0/15",
            "172.64.0.0/13",
            "173.245.48.0/20",
            "188.114.96.0/20",
            "190.93.240.0/20",
            "197.234.240.0/22",
            "198.41.128.0/17"
          ]
        }
      }
    }
  ]
}
```
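The `IpAddress` condition in the Cloudflare statement can be mirrored locally, for example to check in advance whether a given client IP would satisfy the policy. This Python sketch uses the standard `ipaddress` module. `source_ip_allowed` is a hypothetical helper, and the hard-coded ranges are only as current as the policy they were copied from.

```python
import ipaddress
from typing import Iterable

# Cloudflare IPv4 ranges copied from the bucket policy above. Cloudflare
# publishes and occasionally updates this list, so refresh it periodically.
CLOUDFLARE_RANGES = [
    "103.21.244.0/22", "103.22.200.0/22", "103.31.4.0/22", "104.16.0.0/13",
    "104.24.0.0/14", "108.162.192.0/18", "131.0.72.0/22", "141.101.64.0/18",
    "162.158.0.0/15", "172.64.0.0/13", "173.245.48.0/20", "188.114.96.0/20",
    "190.93.240.0/20", "197.234.240.0/22", "198.41.128.0/17",
]


def source_ip_allowed(source_ip: str, cidrs: Iterable[str] = CLOUDFLARE_RANGES) -> bool:
    """Mimic an IpAddress condition on aws:SourceIp: True if the IP is in any CIDR."""
    ip = ipaddress.ip_address(source_ip)
    # An IPv6 address is never "in" an IPv4 network, so it simply returns False.
    return any(ip in ipaddress.ip_network(cidr) for cidr in cidrs)
```

Because the policy lists only IPv4 ranges, a request arriving over IPv6 would not match this statement in the model. Such a request would need its own allowlist entries.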

Object Ownership is an often overlooked but significant control in S3 configuration. There are use cases for maintaining separate bucket and object ownership. However, the configuration limitations of ACLs and the standardization of IAM policies across AWS services reinforce the utility of resource-based bucket and user policies.
